Final Milestone

Second Milestone

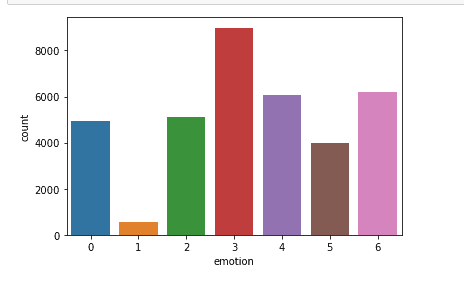

As I began to pre-process my data, I noticed an imbalance in the dataset. Using Matplotlib and pandas, I quickly saw that the three most common emotions in the dataset were ‘neutral,’ ‘happiness,’ and ‘sadness.’ The image below shows the number of images per emotion. The labels are encoded as follows: 0 is ‘anger,’ 1 is ‘disgust,’ 2 is ‘fear,’ 3 is ‘happiness,’ 4 is ‘sadness,’ 5 is ‘surprise,’ and 6 is ‘neutral.’
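A count like this can be produced in a few lines of pandas. The following is a minimal sketch, not the exact code from this project: it assumes the labels sit in a column named `emotion` (as in the commonly distributed FER-2013 CSV), and the function names and the toy data are made up for illustration.

```python
import pandas as pd

# FER-2013 label encoding, as listed in the paragraph above.
EMOTIONS = {
    0: "anger", 1: "disgust", 2: "fear", 3: "happiness",
    4: "sadness", 5: "surprise", 6: "neutral",
}


def count_images_per_emotion(df: pd.DataFrame) -> pd.Series:
    """Return the number of rows (images) for each of the seven labels."""
    # Assumption: the label column is called "emotion".
    counts = df["emotion"].value_counts().reindex(list(EMOTIONS), fill_value=0)
    counts.index = [EMOTIONS[i] for i in counts.index]
    return counts


def plot_counts(counts: pd.Series) -> None:
    """Draw the counts as a bar chart."""
    # Imported here so the counting step works without Matplotlib installed.
    import matplotlib.pyplot as plt

    ax = counts.plot(kind="bar")
    ax.set_xlabel("emotion")
    ax.set_ylabel("number of images")
    plt.tight_layout()
    plt.show()


# Toy stand-in for the real data; with the real file this would be
# something like pd.read_csv(<path to the FER-2013 CSV>).
toy = pd.DataFrame({"emotion": [3, 3, 6, 4, 0, 3, 6]})
counts = count_images_per_emotion(toy)
print(counts)
```

Calling `plot_counts(counts)` on the real data would give a bar chart of the kind described above, which makes the imbalance easy to see at a glance.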

The other four emotions were far less common in the dataset. Around this time, I also realized that accurately predicting many emotions would be quite difficult because people display their emotions very differently. Because the FER-2013 dataset had so few images of emotions other than ‘neutral,’ ‘happiness,’ or ‘sadness,’ I decided to reduce the dataset. The new dataset contained only three emotions: ‘neutral,’ ‘happiness,’ and ‘sadness.’
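Reducing the dataset to three classes amounts to a row filter plus a relabeling step. The sketch below shows one way this could look, again assuming an `emotion` column. The remapping of the kept labels to 0, 1, and 2 is my own illustrative choice (a softmax output layer with three units expects consecutive class indices), and `keep_three_emotions` is a hypothetical helper name.

```python
import pandas as pd

# Original FER-2013 codes for the three emotions that are kept.
KEPT = {3: "happiness", 4: "sadness", 6: "neutral"}

# Assumption: relabel the kept classes as 0, 1, 2 for a three-unit output.
REMAP = {3: 0, 4: 1, 6: 2}


def keep_three_emotions(df: pd.DataFrame) -> pd.DataFrame:
    """Drop every row whose label is not in KEPT and relabel the rest."""
    subset = df[df["emotion"].isin(list(KEPT))].copy()
    subset["emotion"] = subset["emotion"].map(REMAP)
    return subset.reset_index(drop=True)


# Toy stand-in for the full dataset.
toy = pd.DataFrame({"emotion": [0, 3, 4, 6, 1, 3]})
reduced = keep_three_emotions(toy)
print(reduced["emotion"].tolist())
```

Filtering removes the rare classes entirely rather than rebalancing them, so the resulting model can only ever answer with one of the three remaining emotions.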

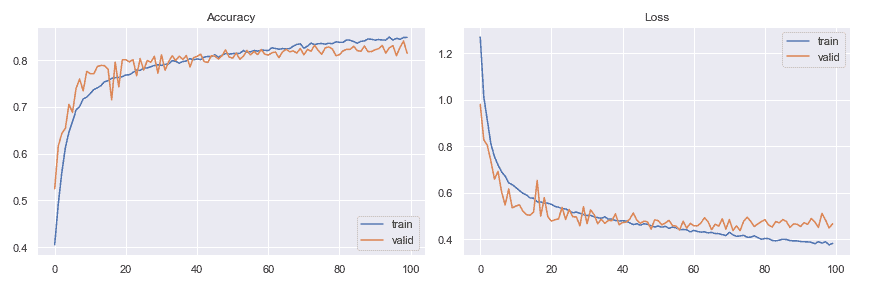

According to the image below, my model performed very well: accuracy increased with each epoch while loss decreased.

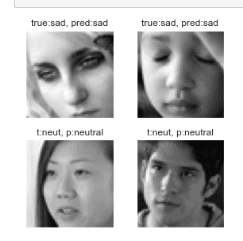

Although the model’s metrics looked excellent, I still wanted to evaluate it on the testing set. Using TensorFlow/Keras’ built-in predict_classes function, I fed images from the testing set into the model. I also used Matplotlib to plot each image alongside its true emotion and the model’s predicted emotion. According to the image below, the model predicted the emotion in each displayed image accurately.
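The prediction-and-plot step can be sketched as follows. This is an illustration under stated assumptions, not the project’s actual code: `predict_classes` was available on Keras `Sequential` models but was deprecated and is absent from newer TensorFlow releases, where the suggested replacement is taking `np.argmax` over the output of `model.predict`. The class ordering in `CLASS_NAMES`, the helper names, and the toy probabilities are all hypothetical.

```python
import numpy as np

# Assumption: class indices 0, 1, 2 map to these names in this order.
CLASS_NAMES = ["happiness", "sadness", "neutral"]


def probabilities_to_classes(probs: np.ndarray) -> np.ndarray:
    """Pick the most probable class per row (what predict_classes did
    for a softmax classifier)."""
    return np.argmax(probs, axis=-1)


def show_predictions(images, true_labels, pred_labels, n=6):
    """Plot up to n images titled with their true and predicted emotions."""
    # Imported here so the argmax step works without Matplotlib installed.
    import matplotlib.pyplot as plt

    n = min(n, len(images))
    fig, axes = plt.subplots(1, n, figsize=(2.5 * n, 3))
    axes = np.atleast_1d(axes)
    for ax, img, t, p in zip(axes, images, true_labels, pred_labels):
        ax.imshow(np.squeeze(img), cmap="gray")
        ax.set_title(f"true: {CLASS_NAMES[t]}\npred: {CLASS_NAMES[p]}")
        ax.axis("off")
    plt.tight_layout()
    plt.show()


# Toy softmax outputs standing in for model.predict(x_test).
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.5, 0.3],
])
true = np.array([0, 2, 0])

pred = probabilities_to_classes(probs)
accuracy = float(np.mean(pred == true))
print(pred, accuracy)
```

A handful of plotted images is a useful sanity check, but computing accuracy over the whole testing set, as in the last lines of the sketch, gives a more reliable picture than a few hand-picked examples.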