Wall-E Emotive Robot

If robots are inherently artificial, how can we replicate the nuances of human emotion through simple gestures and cues? The movie Wall-E showed that people can empathize with a robot character on the big screen, so I applied the same principles to a real-life robot.

Engineer

Nell F

Area of Interest

Mechanical Engineering and Industrial Design

School

Menlo Atherton High School

Grade

Rising Senior

Demo Night

Final Milestone

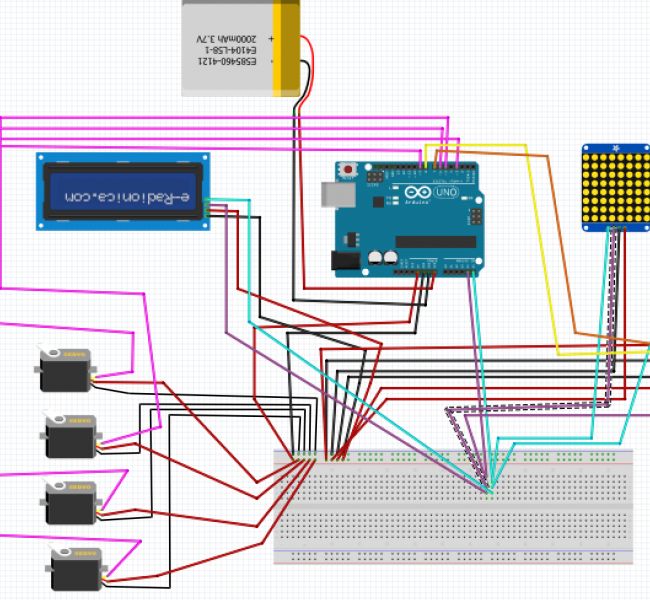

For my Final Milestone, I completed the assembly and programming of my Wall-E emotive robot. In the end, my project used two servos for the head motion, two mini servos for the eyebrow expression, two LED matrices connected on a perf board for the eyes, an LCD for text display, an ultrasonic sensor for object detection, a 6V battery, and an Arduino Uno to control the pieces.

One of my biggest challenges was getting all of my code to work together consistently. There were so many components that I had to be very meticulous in ensuring that none of my if statements contradicted each other.

I also added commands to control my robot’s actions from the serial monitor on my computer. In the future, I would like to add a Bluetooth or radio component to allow for remote control capabilities.
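As an illustration of how serial-monitor commands like these can be mapped to robot actions, here is a minimal, hardware-independent sketch in plain C++. The command letters and action names are hypothetical and are not taken from my actual code; on the Arduino, the character would typically come from `Serial.read()` once `Serial.available()` reports incoming data.

```cpp
// Hypothetical set of actions; the real robot's command set may differ.
enum class Action { None, LookLeft, LookRight, RaiseEyebrows, ShowHappy, ShowSad };

// Map one character typed in the serial monitor to an action.
// Unknown characters map to Action::None so stray input is ignored.
constexpr Action parseCommand(char c) {
    switch (c) {
        case 'l': return Action::LookLeft;
        case 'r': return Action::LookRight;
        case 'e': return Action::RaiseEyebrows;
        case 'h': return Action::ShowHappy;
        case 's': return Action::ShowSad;
        default:  return Action::None;
    }
}
```

Keeping the parsing in one function with a single default case is one way to make sure two commands never trigger conflicting behavior.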

For Bill of Materials and Code click here

Through Bluestamp, I learned how accessible engineering really is. You don’t need thousands of dollars and a team of programmers to accomplish amazing things. With 6 weeks, $200, and no coding experience, I was able to build this robot. I look forward to seeing what else I build in the future.

Second Milestone

For my second milestone, I got all my different components working in a circuit. I also connected both LED matrices to a perf board to consolidate their eight pins into four. It took forever to get the head structure assembled because I needed to troubleshoot how to fit all the components together in such a small space. I also had to adjust my LCD so that it would not clear every time the ultrasonic sensor updated (which was causing it to constantly blink and flicker). Now I have to assemble everything together and program the actual emotion commands.
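One common way to avoid this kind of flicker is to rewrite the LCD only when the value being shown actually changes, instead of clearing it on every sensor update. The sketch below is a hardware-independent illustration of that idea in plain C++; it is an assumption about the general technique, not a copy of my fix, and the names and sample readings are made up.

```cpp
#include <cstddef>

// Rewrite the display only when the whole-centimeter value has changed.
constexpr bool needsRedraw(int lastShownCm, int newCm) {
    return lastShownCm != newCm;
}

// Count how many LCD writes a series of readings would trigger.
template <std::size_t N>
constexpr int countRedraws(const int (&readingsCm)[N]) {
    int shown = -1;   // nothing displayed yet
    int writes = 0;
    for (std::size_t i = 0; i < N; ++i) {
        if (needsRedraw(shown, readingsCm[i])) {
            shown = readingsCm[i];
            ++writes;
        }
    }
    return writes;
}

// Made-up sample: six sensor updates, but only three distinct changes.
constexpr int kSampleReadingsCm[] = {12, 12, 12, 11, 11, 12};
```

With the sample readings, the display would be written three times instead of six, so a steady reading produces no visible blinking.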

First Milestone

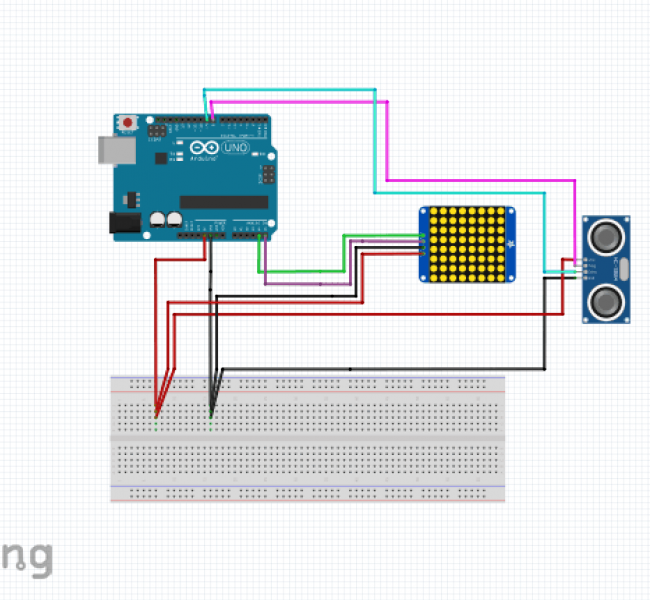

For the first milestone of my Wall-E Emotive robot, my goal was to successfully program two of the Arduino components used in my project and then program them to interact with one another. The components I chose were the 8×8 LED matrix, which would eventually be used to display the robot’s eyes, and the Ultrasonic Sensor, which would allow the robot to determine how far away an object is.

The 8×8 LED matrix is an 8×8 grid of LED dots connected to a total of 16 pins: 8 for the rows and 8 for the columns. Each LED dot sits at the intersection of one row pin and one column pin. When a positive charge runs through one wire and a negative through the other, it completes the circuit and causes that specific dot to light up. This can be controlled through simple on/off commands. However, because every row and column shares its wire with 7 other intersections, it is impossible to light more than a few dots at once without also lighting unwanted ones. This issue can be resolved by storing the image as an array of rows and scanning through it, rather than driving it as one static image. Only one row of lights is on at any instant, but the matrix cycles through the rows so quickly that to a human it looks like one continuous image.
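The row-by-row scanning described above can be sketched in plain C++ without any hardware. This is only an illustration of the idea: the frame pattern, the function names, and the bit layout (leftmost column in the highest bit) are assumptions, and in practice a driver chip or library usually handles the scanning.

```cpp
#include <cstdint>

// One frame is 8 bytes: one byte per row, one bit per column (1 = LED on).
// This smiley pattern is illustrative only.
constexpr std::uint8_t kSmiley[8] = {
    0b00111100,
    0b01000010,
    0b10100101,
    0b10000001,
    0b10100101,
    0b10011001,
    0b01000010,
    0b00111100,
};

// Rows are energized one at a time, in order, wrapping around after row 7.
constexpr int activeRow(unsigned long tick) {
    return static_cast<int>(tick % 8);
}

// Column bits to drive while the active row is on. Every other row is off,
// so no unintended intersections can light up.
constexpr std::uint8_t columnsForTick(const std::uint8_t (&frame)[8], unsigned long tick) {
    return frame[activeRow(tick)];
}

// True if the LED at (row, col) is lit during this tick; col 0 is the leftmost bit.
constexpr bool isLit(const std::uint8_t (&frame)[8], unsigned long tick, int row, int col) {
    return row == activeRow(tick) && ((frame[row] >> (7 - col)) & 1u) != 0;
}
```

At any single tick only one row's LEDs can be lit, which is what prevents the unwanted intersections; repeating the eight ticks fast enough is what makes the image look steady.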

The other component I have working so far is my ultrasonic sensor. It has two sensors attached that look like mini speakers: one is for output and the other is for input. Essentially, the output sensor emits a sound wave signal that bounces off any object in front of the sensor that is within range. When the waves bounce off the object, the signal travels back to the sensor and is detected by the input. While this is happening, the Arduino is timing how long it takes for the signal to go out and come back. It then takes this length of time and multiplies it by the speed of sound, computing the total distance that the signal travelled. This distance is then divided by two in order to determine how far away the object itself is.
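The calculation described above can be written as a one-line function. This is a minimal sketch in plain C++, assuming the round-trip time is measured in microseconds and taking the speed of sound as roughly 343 m/s (0.0343 cm per microsecond) at room temperature; the names are illustrative, not from my actual code.

```cpp
// Speed of sound in air at roughly room temperature (assumed value),
// in centimeters per microsecond (about 343 m/s).
constexpr double kSpeedOfSoundCmPerUs = 0.0343;

// Convert the round-trip echo time into the one-way distance to the object.
// The signal travels out and back, so the total distance is halved.
constexpr double echoTimeToDistanceCm(double roundTripMicros) {
    return roundTripMicros * kSpeedOfSoundCmPerUs / 2.0;
}
```

For example, a round trip of 1000 microseconds corresponds to an object about 17 cm away, and an object at 10 cm produces a round trip of roughly 583 microseconds.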

For my milestone, I programmed the LED matrix to display a frowny face, but to flip to a happy face if the ultrasonic sensor detects an object within 10 cm of the sensor (this number is arbitrary and can easily be adjusted as desired). In order to do that, I had to set up the two appliances to interact with each other, which I did with an if-else statement where the LED matrix responds in different ways depending on the reading from the ultrasonic sensor.
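The if-else decision can be sketched as follows in plain C++. The enum and function names are hypothetical, and treating exactly 10 cm as "within range" is an assumption; only the 10 cm threshold and the frown-by-default behavior come from the description above.

```cpp
// The two faces the LED matrix can show in this milestone.
enum class Face { Frown, Smile };

// Detection threshold in centimeters; arbitrary and easy to adjust.
constexpr double kNearThresholdCm = 10.0;

// Pick the face to display from the measured distance.
constexpr Face chooseFace(double distanceCm) {
    if (distanceCm <= kNearThresholdCm) {
        return Face::Smile;   // an object is close to the sensor
    } else {
        return Face::Frown;   // default when nothing is nearby
    }
}
```

The `else` branch matters: without it, the matrix would have no instruction for what to show once the object moves away.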

One challenge I faced in this process was getting acquainted with all the components. I had never worked with any of the appliances or an Arduino before, and I had never worked with C or C++. This meant I did not have a natural intuition for these components: for example, I didn’t realize I needed to put a backpack on my LED in order to properly connect it to my Arduino. I also didn’t realize that for my conditional statement I needed both an “if” and an “else” (i.e., “if this happens, then do that, or else do this other thing”). At first, I was trying to program my LED with only a function that defined what would happen if the sensor detected an object within the set distance. Soon, I realized I needed a complementary function that would define what the LED should do in the absence of an object.

Starter Project

For my starter project I chose to build the Useless Machine. Essentially, the exact function of the machine is to flip a switch. The way you activate said function is by flipping said switch. The machine undoes the exact action done to it. Hence: Useless.

The machine works by running a circuit which connects a battery pack, a motor, some resistors, a reverse polarity switch, a switch break, and an LED. As electrons flow through the circuit in a particular direction, the motor rotates clockwise until the arm (which is operated by the motor) hits the switch, causing it to flip. The flip of the switch causes a manual redirection of the wires within the circuit, effectively reversing the polarity (or changing the direction of the flow of electrons). Reversing the polarity causes the motor to run counterclockwise and the arm to descend back into the box until it hits the switch break, which cuts the circuit off so the motor does not continue to rotate unnecessarily.

While all of this is happening, the LED is also changing color. The LED, which is inherently a diode, has two potential inputs within the circuit, but only one output wire, which serves as the ground. In order to complete the circuit, the negative charge runs through one of these two inputs. Either the negative charge runs through one side, and the result is a green light, or it runs through the other, and the light is red. The color change occurs when the reverse polarity switch is activated, which accounts for why the negative charge can change which wire it runs through. Like the motor, the LED turns off when the switch break is hit.