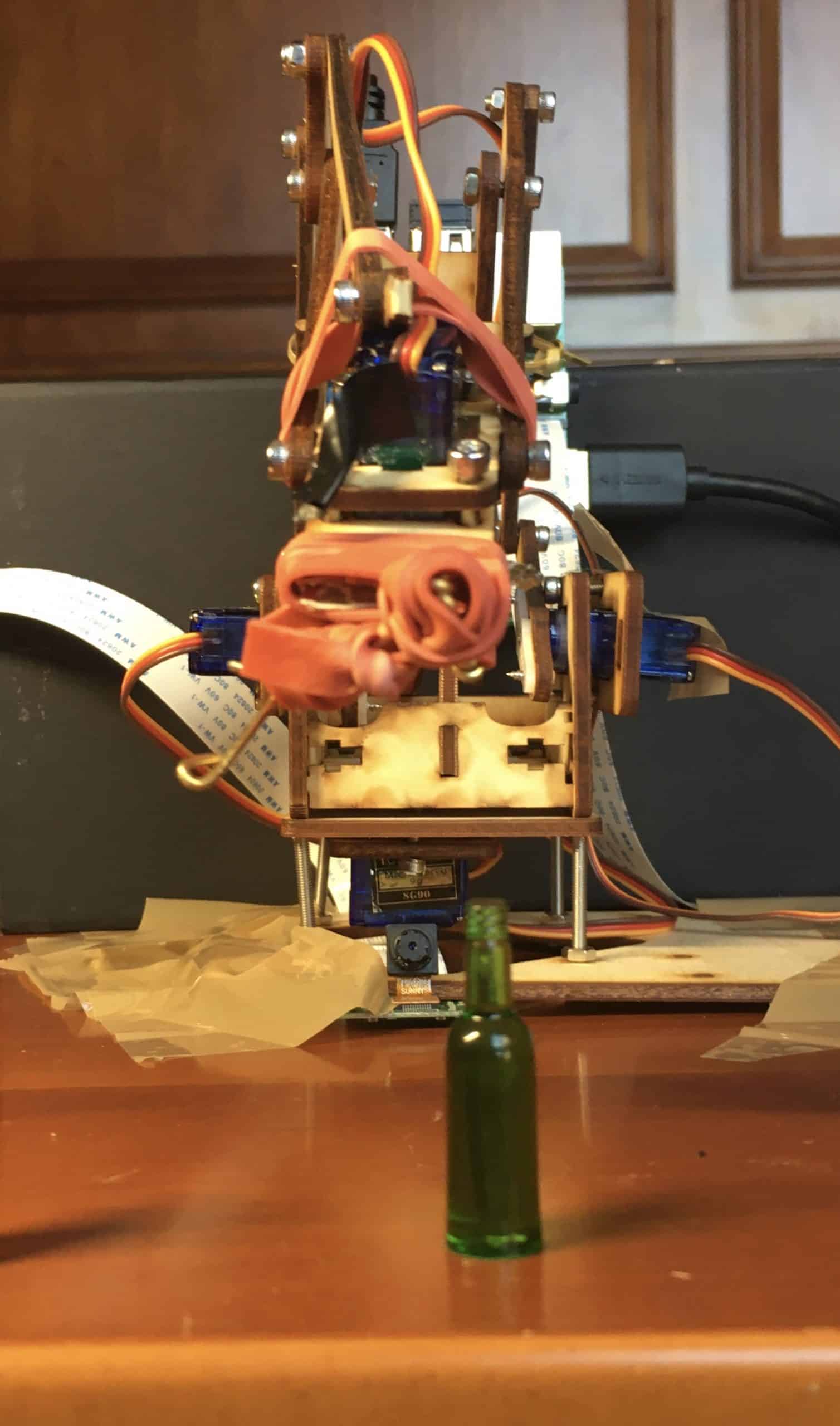

Demonstration

Over the course of this project, I learned a lot about technology for sustainability. I’ve been interested in sustainability for a while, and in urban sustainability in particular because I grew up in a large city. It was a great experience to bring several of my interests together in this project: machine learning, sustainability, and robotics, to name a few. Glass recycling in particular is an issue I don’t often hear brought up. In fact, recycling in general is treated as something that happens in the background rather than as an important, and improvable, part of how we take care of the environment around us.

First Milestone

My first milestone for my glass sorting system was to set up the Raspberry Pi and camera and run a pre-trained model. Although I faced challenges setting up the RPi, I was ultimately able to write a Python program that sent an image to NanoNets, where a pre-trained object detection model processed it and returned an identification, which the program printed to the console. At first the input was an image I’d downloaded from the internet and referenced explicitly in the code. However, after I repurposed a program I had written to take photos with the RPi camera, the RPi could photograph a physical object, such as a knife, and return its identification from the pre-trained model.
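The last step of that flow, turning the model's reply into a single identification to print, can be sketched in a few lines of Python. This is only an illustration, not the code used in this project: the list-of-dictionaries shape with `label` and `score` keys, the `best_label` helper, and the `min_score` cutoff are all assumptions made for the example, and the actual NanoNets response format may differ.

```python
def best_label(predictions, min_score=0.5):
    """Return the label of the highest-scoring prediction, or None.

    `predictions` is assumed to be a list of dicts with "label" and
    "score" keys. This shape is hypothetical and chosen for illustration.
    """
    # Ignore low-confidence detections so noise is not reported as a result.
    confident = [p for p in predictions if p["score"] >= min_score]
    if not confident:
        return None
    return max(confident, key=lambda p: p["score"])["label"]


# Hypothetical reply for a photo of a knife (made-up values).
sample = [
    {"label": "knife", "score": 0.91},
    {"label": "spoon", "score": 0.32},
]
print(best_label(sample))
```

Returning `None` when nothing clears the cutoff lets the calling program tell "no confident identification" apart from a real label.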

Second Milestone

For my second milestone, I created a custom model on NanoNets that can distinguish between colors of glass bottles, both whole and broken. So far I have trained it on green, brown, clear, and blue, and more colors can be added in the future. I found and hand-annotated almost 200 photos, and I may add more as I try to increase the accuracy. Although the model was at 90% accuracy when I made this video, it had reached 95% by the time of my demonstration.
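One simple way to read an accuracy figure like these is as the fraction of test photos given the correct color label. The sketch below computes that fraction overall and per color. It assumes accuracy means exact label matches on a held-out set, which may not be how NanoNets reports it; the `accuracy_by_class` helper and the sample labels are made up for illustration and are not results from this project.

```python
from collections import defaultdict


def accuracy_by_class(true_labels, predicted_labels):
    """Return (overall accuracy, {label: accuracy for that label})."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be the same length")
    totals = defaultdict(int)   # photos seen per true color
    correct = defaultdict(int)  # photos labeled correctly per true color
    for truth, guess in zip(true_labels, predicted_labels):
        totals[truth] += 1
        if truth == guess:
            correct[truth] += 1
    overall = sum(correct.values()) / len(true_labels) if true_labels else 0.0
    per_class = {label: correct[label] / totals[label] for label in totals}
    return overall, per_class


# Made-up labels for illustration only.
truth = ["green", "green", "brown", "brown", "clear", "clear", "blue", "blue"]
guess = ["green", "green", "brown", "clear", "clear", "clear", "blue", "blue"]

overall, per_class = accuracy_by_class(truth, guess)
print(f"overall: {overall:.1%}")
for label, score in per_class.items():
    print(f"{label}: {score:.1%}")
```

Breaking the figure down by color shows which class would benefit most from additional annotated photos.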