Unity 3D Virtual Reality Android Game

My extensive project at BlueStamp is a Virtual Reality game. To start, I will be working on Matthew Hallberg’s Instructable for a Virtual Reality shooter with a controller, and then I will move on to developing my own Unity 3D project for Android.

Name:

Andrew L.

Year:

Rising Senior

High School:

Cupertino High School

Year of Graduation:

2018

Area of interest:

Computer Science

My Project on Showcase Night

A Look Back at BlueStamp

My time at BlueStamp Engineering has been a blast, and it taught me how to be an independent learner and to actively pursue what I love to work on. Going into the program, I wanted to get my feet wet with electrical engineering, and I got to do that on my very first day, when I soldered my first circuit board. However, I was most excited about my main project because of my love of games. Throughout my six weeks, I gave myself the opportunity to face many walls that stood between me and a finished project. Struggling with my extensive project made me realize that my end goal, whether it is researching virtual reality technology, game development, or something altogether different, is far, far away. But to me, this is great. Knowing that I cannot simply go through school (and life in general) assuming I will get down to work as soon as I have learned what I need from my educators has pushed me to stop sitting on my hands and start building a reality that 17-year-old me only dreams of.

Materials required for this project

| Item | Quantity |
|---|---|
| ESP8266 ESP-12E NodeMCU | 1 |
| Micro USB to USB cable* | 1 |
| Male to male jumper wires | 6 |
| Four-terminal push buttons | 2 |
| Breadboard (for circuitry, not cooking) | 1 |
| LiPo 3.7V battery | 1 |
| Google Cardboard virtual reality mobile headset* | 1 |
| Phone with Android 4.4 (KitKat) or higher* | 1 |

*Note: If you are only making the VR application, the items marked with an asterisk are all you need.

Third Milestone

Third Milestone Overview

Progress Made

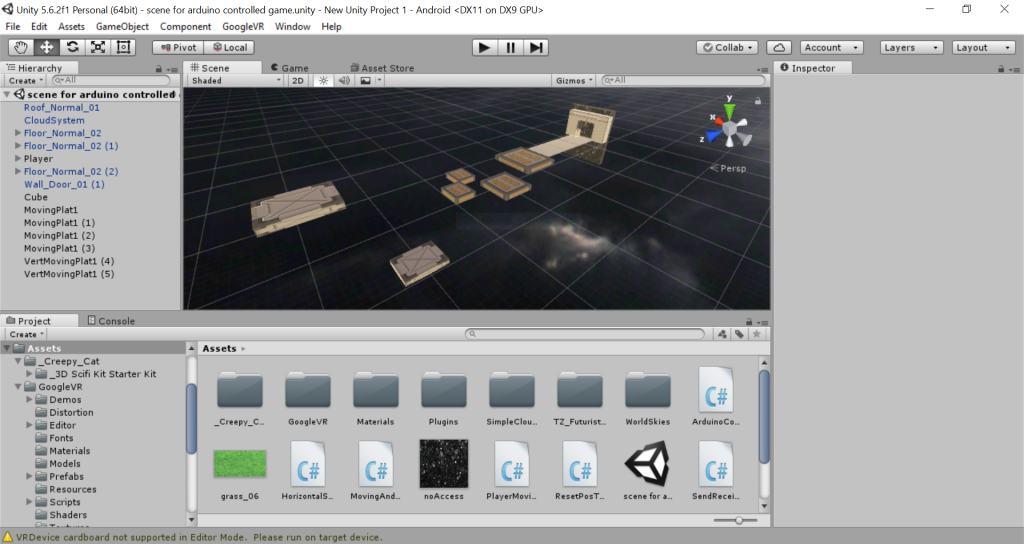

Making the platformer more presentable with Unity’s Standard Assets

For this next step, I wanted to go back and flesh out some aspects of my platformer game and give it a simpler, cleaner look. I imported Unity’s Standard Assets and made my new platforms from the platform prefabs in the Prototyping folder, adding Box Colliders to them; they look a lot neater than the platforms I had added textures to. With wall prefabs from the same assets, I created a simple level with a walled-off starting area for the player and platforms that use my horizontal and vertical moving scripts. I also added a cartoon-styled skybox, which is the sky background of the game environment; it is one of several free skyboxes on the Unity Asset Store.

Player movement script: auto-walking and position reset

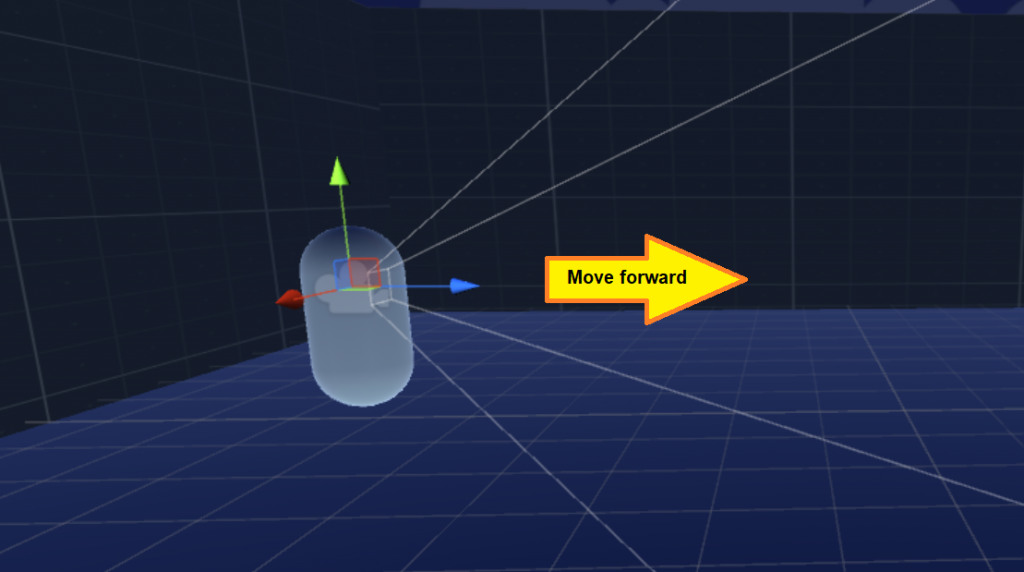

1. Auto-walking

After setting up my environment, I wrote new player movement scripts, reusing parts of what I wrote for my last milestone, to better suit the VR platformer I wanted to make. For this game, I settled on limiting player controls to moving the head (and therefore the phone). Using a controller for movements such as walking and jumping had to be set aside, because I did not have enough time to learn how to set up working UDP or TCP communication, over wifi or another medium, between the application (which would run the server program) and the controller (which would run a client program that sends TCP packets to the server).

I wanted my player to walk in whatever direction they are looking, except when looking too high or too low. For walking forward, I recycled code from my old movement script. Then I added public float variables for the minimum and maximum x rotation of the main camera that still allow the player to move forward, plus if statements to check that condition. Checking the condition turned out to be trickier than I expected. The Inspector shows the camera’s rotation as Euler angles in degrees, but transform.rotation in code is a quaternion, so rotation.x is not an angle at all; for small tilts it just happens to come out close to a hundredth of the value shown in the Inspector. Thus, when I wrote:

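```csharp
// rotation is a Quaternion, so rotation.x is not the pitch angle in degrees
if (Camera.main.transform.rotation.x >= minXBounds &&
    Camera.main.transform.rotation.x <= maxXBounds) { /* walk forward */ }
```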

I struggled for a while because I did not understand why my player would not stop moving when looking at an angle greater than my maxXBounds float. After using Debug.Log() to print my main camera’s x rotation to Unity’s console during the game, I found that a simple workaround was to compare against minXBounds * 0.01 and maxXBounds * 0.01 instead.

My auto-walking script can be found on Google Drive here: auto-walk script
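For comparison, here is a minimal sketch of the same bounds check done in degrees. It assumes the pitch is read from Unity’s transform.eulerAngles.x (0 to 360 degrees, where values just below 360 mean the camera is tilted up) instead of the quaternion component rotation.x. The function names are hypothetical, and the logic is written as plain C++ so the arithmetic can be checked outside Unity; quaternionXForPitch shows why the 0.01 workaround roughly worked.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: Unity reports eulerAngles.x in [0, 360), so a camera
// tilted 10 degrees up reads 350. Map it to a signed pitch in (-180, 180].
double signedPitch(double eulerX) {
    assert(eulerX >= 0.0 && eulerX < 360.0);
    return eulerX > 180.0 ? eulerX - 360.0 : eulerX;
}

// Auto-walk only while the pitch (in degrees) lies inside [minDeg, maxDeg].
bool canAutoWalk(double eulerX, double minDeg, double maxDeg) {
    double pitch = signedPitch(eulerX);
    return pitch >= minDeg && pitch <= maxDeg;
}

// For a rotation of pitchDeg about the x axis only, the quaternion's x
// component is sin(pitch / 2); this is what rotation.x actually holds.
double quaternionXForPitch(double pitchDeg) {
    const double kPi = 3.14159265358979323846;
    return std::sin(pitchDeg * kPi / 180.0 / 2.0);
}
```

With bounds of, say, -20 to 20 degrees, canAutoWalk(350, -20, 20) is true (10 degrees up) and canAutoWalk(45, -20, 20) is false. For a 20 degree tilt, quaternionXForPitch returns about 0.174, close to 20 × 0.01 = 0.2, which is why the hundredth approximation seemed to hold. The gap grows at larger angles, and the real rotation.x also shrinks as the camera turns away from its starting direction, so comparing degrees avoids both problems.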

2. Resetting player position when player is out of bounds

After I got this to work, I wanted to make testing easier, so I needed a script that resets the player to the starting area when the player GameObject falls too far below the platforms (so I can keep test-running the level without restarting the game). I found out how to do this from sluice’s answer at: saving and loading player positions. This also taught me that I can store a float value under a string key using PlayerPrefs.SetFloat(); the same string is later used to read the value back with PlayerPrefs.GetFloat().
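The save-and-reset idea can be sketched as follows, assuming a std::map stands in for PlayerPrefs (floats stored under string keys). The key names "startX", "startY", "startZ", the kill height, and the function names are hypothetical; in Unity, the writes would be PlayerPrefs.SetFloat(key, value) calls and the reads PlayerPrefs.GetFloat(key).

```cpp
#include <cassert>
#include <map>
#include <string>

struct Vec3 { float x, y, z; };

// Stand-in for Unity's PlayerPrefs: floats stored under string keys.
using Prefs = std::map<std::string, float>;

// Save the starting position under hypothetical keys.
void saveStart(Prefs& prefs, const Vec3& start) {
    prefs["startX"] = start.x;
    prefs["startY"] = start.y;
    prefs["startZ"] = start.z;
}

// If the player has fallen below killY, move them back to the saved start.
// Returns true when a reset happened.
bool resetIfFallen(const Prefs& prefs, Vec3& player, float killY) {
    assert(prefs.count("startX") && prefs.count("startY") && prefs.count("startZ"));
    if (player.y >= killY) return false;
    player = {prefs.at("startX"), prefs.at("startY"), prefs.at("startZ")};
    return true;
}
```

Calling resetIfFallen once per frame is enough: it does nothing while the player is above the kill height and teleports them back as soon as they drop below it.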

Porting my game to my phone as an .apk

With my basic game working, I was ready to build my Unity project as an Android application on my phone. To do so, I followed the official Unity guide available here: building to Android. However, I ran into several problems along the way. After I downloaded the Android command line tools, I could not get the android.bat file to run: the command prompt window appeared but then closed itself almost immediately. I tried running the file as administrator, then tried Talha’s solution on Stack Overflow, but android.bat still could not locate Java on my computer. I ended up downloading the full Android Studio, which includes the command line tools, and pointed Unity 3D to the location of the Android SDK that came with the Android Studio installation. The last hiccup outside of the Unity guide was that I had to set my application’s minimum Android version to KitKat (Android 4.4, API level 19), since GoogleVR only works on KitKat or higher. Once this was dealt with and the prerequisites for running the VR app were installed on my phone (automatically), I was finally able to see my project working on my phone!

Second Milestone

Second Milestone Overview

Progress Made

Making my first Unity 3D project

After completing Matthew Hallberg’s Instructables project, I went on to create my first Unity project. I started by downloading and installing Unity 3D, a game development platform used to build 3D and 2D games. Then I created a new 3D project and learned basic workspace maneuvers, such as panning and navigating the Scene View and adding GameObjects to a scene. GameObjects are the objects that make up the game environment, such as a plane for the ground or the player itself. They are not limited to objects the player can see: empty GameObjects can be created too. Scripts, or programs (in Unity, written in C# or UnityScript, Unity’s JavaScript-like language), can be attached to GameObjects to give them behavior, such as ending the game when the player messes up. A scene is the workspace that objects are placed in, and it often serves as a “level” in games, because switching the active scene lets a player progress from one stage to the next. The Camera object provides the perspective the player sees the game through; a first-person game places the main camera inside the player’s character, facing the direction the character’s eyes would face.
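The relationship between scenes, GameObjects, and attached scripts can be pictured with a toy model like the one below. This is a simplified illustration, not Unity’s actual implementation; the type names (Script, GameObject, Scene, FrameCounter) are hypothetical. The key idea it shows is that a GameObject does nothing on its own: its behavior comes from the scripts attached to it, which the engine updates once per frame.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Toy model (not Unity's implementation): a GameObject is a named container,
// and behavior comes from the scripts attached to it.
struct Script {
    virtual ~Script() = default;
    virtual void update(float dt) = 0;  // called once per frame
};

struct GameObject {
    std::string name;
    std::vector<std::unique_ptr<Script>> scripts;
    void update(float dt) {
        for (auto& s : scripts) s->update(dt);
    }
};

// A scene is the list of GameObjects that get updated every frame.
struct Scene {
    std::vector<GameObject> objects;
    void tick(float dt) {
        assert(dt >= 0.0f);
        for (auto& obj : objects) obj.update(dt);
    }
};

// Hypothetical script: counts frames, like a timer on an empty GameObject.
struct FrameCounter : Script {
    int frames = 0;
    void update(float) override { ++frames; }
};
```

An empty GameObject such as a "GameManager" holding a FrameCounter is invisible in the game, yet its script still runs on every frame, which is exactly how game-wide logic like ending the game is usually hosted.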

Setting up the Unity project for a Virtual Reality supported Android build

Since I aimed to make a virtual reality game for Android, I learned that I should import the Google VR Software Development Kit (GVR SDK) for Unity v1.60, which I downloaded here: GVR SDK download. A Software Development Kit is a bundle of software tools for developing applications; the GVR SDK is aimed at Virtual Reality applications. It comes in a package with prefabs, or ready-made GameObjects, that are free to use. The prefabs in this package have scripts attached to them that handle tasks such as rotating a camera object using the phone’s sensors as input.

After importing the package, I opened the demo projects within it to learn how the prefabs worked by looking at how the demos were put together. Since the demos used multiple prefabs, I wanted to know the minimum set needed to tie camera rotation to phone rotation, as that is all I would need for my first VR game. After doing some research, I found that almost none of the guides on using the GVR SDK prefabs still applied, because a new version of the SDK had been released. To check the version and changes, I visited the Google VR SDK release notes. Many of the prefabs people had used before were deprecated (marked as obsolete) or removed, and replaced with new assets that accomplish the same function in different ways. Recent tutorials were hard to find, but I eventually found one on YouTube that looks at the demos in a version (v1.50) closer to the one I was using and shows how to set up the Unity project to build for Android: NurFace Games’ v1.50 release overview.

To set up the Unity project for Android, I opened File > Build Settings from Unity’s menu bar, selected Android as the application’s platform, and clicked Switch Platform. Then, to set it up for VR, I clicked the Player Settings button next to “Switch Platform” and checked Virtual Reality Supported under Other Settings in the Player Settings panel that appears in the Inspector window. An area titled “Virtual Reality SDKs” then appears below it; clicking the “+” symbol in this area lets you choose from several SDKs. I selected Google Cardboard for this project.

Making a Movable Player (Keyboard and Arduino NodeMCU control)

Moving on, I wanted to create a first-person platformer as my first application. From NurfaceGame’s video (the one linked above), I learned that GVREditorEmulator is the prefab used to create the first-person view with mobile input (rotation with phone movement). I then made a capsule GameObject as a simple player model and made a Camera (which I tagged as MainCamera) and the GVREditorEmulator children of the capsule, because they are components of the player. To assign the camera that the GVREditorEmulator rotates when the phone rotates, I chose my MainCamera in the Camera field of the GVREditorEmulator’s Inspector.

Making the player movable with WASD and arrow keys was my next goal. First, I gave the plane in my game environment a box collider so that the player would not fall through it. Then I wrote a simple script that changes the player object’s position (transform.position.x for the x axis, and so on) while a certain key is held down. I attached this script directly to the player object so that key presses move the player, not some other object. I tested my code by putting my player on a textured plane so I could see whether the player was moving relative to the ground. The player object kept tipping over because it was a capsule, so I froze its x, y, and z position and rotation in its Inspector; movement caused by a script changing the object’s position directly still works.
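The per-key position change can be sketched like this. It is a minimal model, assuming Unity’s convention that +z is forward and +x is right; the Keys struct and applyKeys function are hypothetical stand-ins for checking key states inside a Unity Update() method. Multiplying by the frame time (in Unity, Time.deltaTime) keeps the walking speed the same regardless of frame rate.

```cpp
#include <cassert>

struct Position { float x, y, z; };

// Hypothetical key state for one frame (true while the key is held down).
struct Keys { bool forward, back, left, right; };  // W/S/A/D or arrow keys

// Move along the x and z axes by speed * dt for each held key, the same idea
// as editing transform.position.x directly in a Unity script.
void applyKeys(Position& p, const Keys& k, float speed, float dt) {
    assert(speed >= 0.0f && dt >= 0.0f);
    float step = speed * dt;
    if (k.forward) p.z += step;
    if (k.back)    p.z -= step;
    if (k.right)   p.x += step;
    if (k.left)    p.x -= step;
}
```

Holding W and D together for half a second at 2 units per second moves the player one unit forward and one unit right, while y is left alone for gravity or jumping to handle.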

The jumping script was similar to the walking script: it translates the player up along the y axis when the space bar is pressed, with a short cooldown. However, since the jumping motion was updated every frame, my player shot upward extremely quickly. Furthermore, when the player landed on the plane, it tilted and flew away, which is why I locked its x, y, and z positions and rotations in its Inspector.
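One way to keep a held space bar from re-triggering the jump every frame is a cooldown gate like the sketch below. The JumpGate class and its cooldown value are hypothetical; `now` would come from something like Unity’s Time.time.

```cpp
#include <cassert>

// Hypothetical jump controller: a jump is allowed only if `cooldown` seconds
// have passed since the last one, so holding space does not re-trigger the
// jump on every frame.
class JumpGate {
public:
    explicit JumpGate(float cooldown) : cooldown_(cooldown) { assert(cooldown > 0.0f); }

    // Call once per frame with the current time in seconds.
    bool tryJump(bool jumpPressed, float now) {
        if (!jumpPressed || now - lastJump_ < cooldown_) return false;
        lastJump_ = now;
        return true;
    }

private:
    float cooldown_;
    float lastJump_ = -1e9f;  // far in the past, so the first jump is allowed
};
```

In Unity, the `true` branch is where a single upward push would go, for example Rigidbody.AddForce(Vector3.up * jumpForce, ForceMode.Impulse) with a hypothetical jumpForce field, instead of translating the player a little on every frame.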

Establishing serial communication between my NodeMCU and Unity 3D and making walking depend on camera orientation

After I finished these scripts, I wanted my NodeMCU controller to control walking forward and jumping. I found a sample script for sending strings from my NodeMCU to Unity over serial communication in Farneze’s answer here: Arduino to Unity serial coms. I then added if statements to my walking code that increased the player’s x position when my SerialPort object read the string “walk”, and added the same functionality to my jumping script. This worked, but I realized that I wanted the player to move in the direction they are facing, since they may not be facing the positive x direction. I found the right approach for my script in GargerathSunman’s answer on the Unity forums: Moving in direction camera is facing. I ended up changing the code to depend on a walkSpeed variable rather than on Unity’s Time settings.
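A detail worth handling when comparing serial strings: Arduino’s Serial.println() ends each message with "\r\n". If the reading side splits lines on "\n" only (the .NET SerialPort.ReadLine() default), the message arrives as "walk\r" and an exact comparison with "walk" fails. The sketch below, with hypothetical names, models the Unity side in C++: it buffers incoming data, splits it into complete lines, trims the trailing "\r", and maps each line to a command.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class Command { Walk, Jump, Unknown };

// Trim trailing '\r' (Serial.println sends "\r\n") and spaces from one line.
std::string trimLine(std::string line) {
    while (!line.empty() && (line.back() == '\r' || line.back() == ' '))
        line.pop_back();
    return line;
}

Command toCommand(const std::string& raw) {
    const std::string line = trimLine(raw);
    if (line == "walk") return Command::Walk;
    if (line == "jump") return Command::Jump;
    return Command::Unknown;
}

// Split a chunk of received serial data on '\n' and convert each complete
// line; a trailing partial line is kept in `pending` for the next read.
std::vector<Command> parseChunk(std::string& pending, const std::string& chunk) {
    std::vector<Command> out;
    pending += chunk;
    std::string::size_type nl;
    while ((nl = pending.find('\n')) != std::string::npos) {
        out.push_back(toCommand(pending.substr(0, nl)));
        pending.erase(0, nl + 1);
    }
    assert(pending.find('\n') == std::string::npos);  // only a partial line remains
    return out;
}
```

Keeping the partial line matters because serial data can arrive split mid-word: "walk\r\nju" yields one Walk command and leaves "ju" waiting until "mp\r\n" completes a Jump.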

After implementing that logic, I found that when my player looked up and walked, they would “walk” up into the air. Since I didn’t want this, I saved the player object’s y position as a float before moving and set the y position back to that value after moving.
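An alternative to restoring y after the move is to flatten the camera’s forward vector before moving, sketched below with hypothetical names. Dropping the y component and renormalizing keeps the player on the ground and also keeps horizontal speed constant, whereas restoring y makes the player walk more slowly the further up or down they look. In Unity this would roughly be taking Camera.main.transform.forward, setting its y to 0, and calling Normalize() on it.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Move `pos` along the camera's forward vector but only horizontally:
// the y component is dropped and the rest renormalized, so looking up or
// down does not make the player "walk" into the air or the ground.
Vec3 stepForward(Vec3 pos, Vec3 camForward, double walkSpeed, double dt) {
    assert(walkSpeed >= 0.0 && dt >= 0.0);
    double len = std::sqrt(camForward.x * camForward.x + camForward.z * camForward.z);
    if (len < 1e-6) return pos;  // looking straight up/down: no horizontal direction
    double step = walkSpeed * dt;
    pos.x += camForward.x / len * step;
    pos.z += camForward.z / len * step;
    return pos;  // pos.y is unchanged
}
```

A camera looking forward and partly up, with forward vector (0, 0.6, 0.8), still moves the player a full step along z, and y never changes.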

Adding moving platforms

The last thing I finished for this milestone was a set of platforms that the player can jump across, for a possible parkour simulator. For the horizontal and vertical looping movement of the platforms, I used KellyThomas’ script found here: platform movement loop.
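A common way to make a platform loop back and forth is a ping-pong function of time, sketched below. This is not KellyThomas’ script; pingPong mirrors the shape of Unity’s Mathf.PingPong(t, length), and platformOffset with its parameters is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Same shape as Unity's Mathf.PingPong(t, length): rises from 0 to length,
// falls back to 0, and repeats.
double pingPong(double t, double length) {
    assert(length > 0.0);
    double m = std::fmod(t, 2.0 * length);
    if (m < 0.0) m += 2.0 * length;
    return length - std::fabs(m - length);
}

// Platform position along one axis: start plus a back-and-forth offset of up
// to `distance`, moving at `speed` units per second.
double platformOffset(double start, double distance, double speed, double time) {
    return start + pingPong(time * speed, distance);
}
```

Using the result for the x coordinate gives a horizontal platform and using it for y gives a vertical one; because the position is computed from time rather than accumulated each frame, the platform never drifts out of its range.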

Player movement script (C#) on Google Drive:

movement script with serial communication (reading) with Arduino

Unity game environment

First Milestone

A video demonstration of my prototype controller. The Android Unity application I used for the demo was made by Matthew Hallberg.

The Arduino sketch for the controller was also made by him, though I added a few test lines. These resources are available at http://www.wirebeings.com/virtual-reality-gun.html.

First Milestone Overview

Progress Made

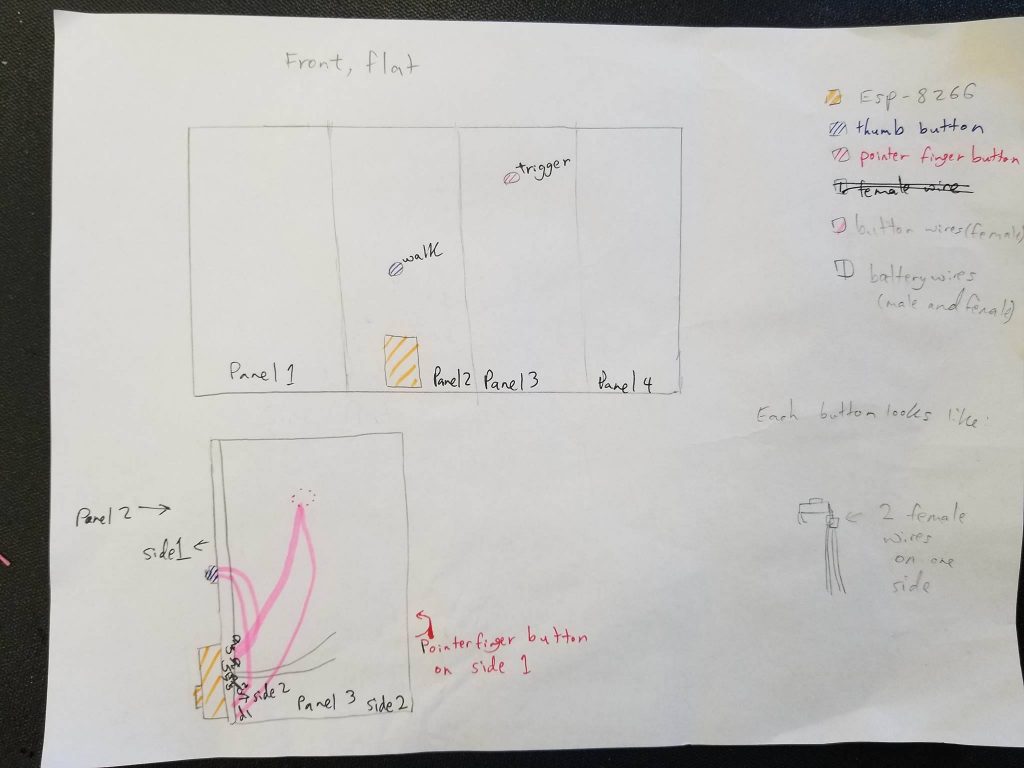

For the first milestone of my VR game/controller project, I focused on making a simple working controller prototype and learning basic 3D modeling skills for designing a 3D-printed controller.

The first step I took was creating a schematic for the controller I was going to make. To do this, I first had to learn what connections were necessary between the buttons, the NodeMCU dev board, and the power source (battery pack). My first “schematic” was a sketch I drew on paper to familiarize myself with the basic design. I wanted to keep my first controller design very simple, so I settled on a rectangular prism in my sketch. However, 3D printing the design is not a priority for me and may not be part of this project, as I will focus on the software and on creating my own game after this milestone.

Obviously, the sketch I drew (right) isn’t very clear; it exists mostly for my reference. Going forward, I looked for a program I could use to make a neater diagram that others could understand more easily. I tried several programs before settling on Fritzing, which lets users drag electrical components from an extensive library onto breadboard and schematic views to make circuit designs quickly. However, I realized that the NodeMCU development kit model in Fritzing’s default library is not the same as the one I planned to use for my controller (NodeMCU 1.0 ESP-12E). I then learned how to import new parts into Fritzing, and eventually found the model I needed at https://github.com/squix78/esp8266-fritzing-parts/tree/master/nodemcu-v1.0.

With this, I only had to add my two push buttons and battery pack to the schematic and wire them to the development board. I hit another small bump in the road when choosing my button parts: Fritzing’s part library had many four-pin push buttons, and I had no idea which one was the kind I wanted. This prompted me to research how my buttons work and what types of buttons exist before I could make a confident decision (I explain what I learned in a section below).

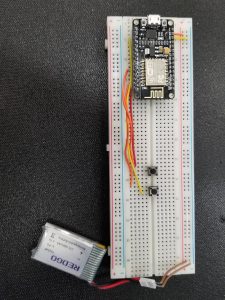

After the buttons were placed, I finished the schematic quickly and started prototyping soon after. I tried making all of the controller’s connections exactly as they would be in the printable controller model I planned to design later. However, I realized that without a controller shell to hold the jumper wires and buttons in place, the wires would be troublesome: the button pins fit loosely in the wires’ female ends and could easily detach while testing. With this in mind, I decided to build my prototype on a breadboard and made a breadboard diagram in Fritzing using the same parts as in my schematic (right).

My next step was to learn how to actually use a breadboard. This took me a while, because even after researching how current runs through the board, I made many small mistakes wiring the buttons. Mostly, these were trivial missteps like putting jumper wires in the wrong holes, which caused me some frustration later when I uploaded an Arduino sketch to the NodeMCU and began testing. I also learned that some parts of the NodeMCU can get very hot while it is powered, when I touched one of its metal parts during the process (it was not hot enough to burn, though).

Once I thought my connections were correct, I set up the Arduino IDE so that NodeMCU 1.0 (ESP-12E) was a selectable board, and uploaded a sketch that lets the NodeMCU communicate with a phone over a wifi network and makes the microcontroller respond to button presses by sending a fire/move command to the game. I then launched the game on my phone to test the buttons, but they did nothing. The problem could have been my wiring and hardware, or the microcontroller failing to send information to my phone over wifi.

To diagnose this issue, I added a few test lines to the methods that set the firing and walking conditions to true/false, to see whether the button presses were being detected at all and thus whether my wiring was correct. I learned that instead of Java’s System.out.println(), I could use Serial.println() and see my test messages in the Serial Monitor of the Arduino IDE. I also learned that the Serial Monitor has to be set to the same baud rate that the sketch passes to Serial.begin() for the messages to appear correctly.

After I set this up, I found that my buttons were actually working, since my firing and walking test messages appeared. This led me to focus on what could be stopping my microcontroller from communicating with the game, and I realized that the wifi I was using was a school network that required an email login. Since the sketch I uploaded connects to wifi using only the network name, IP address, and password, I tried my phone’s wifi hotspot instead. While my own hotspot did not work (the ESP8266 could connect to it but could not communicate with the game over it), the game worked perfectly with other people’s hotspots.

Controller schematic made on Fritzing

Controller breadboard diagram made on Fritzing

How it Works

The Development Board and Arduino IDE

The prototype I created is made of just a few major components: a NodeMCU 1.0 ESP8266-12E, two four-terminal push buttons, male to male jumper wires, and a breadboard. The NodeMCU is a development board with a microcontroller and an ESP8266 wifi chip. A microcontroller is a small computer on a single chip, with its own memory, that is dedicated to a specific function; that function can be changed by uploading a new program to it from a computer over a USB cable. The wifi chip allows the microcontroller to connect to wifi and therefore communicate with other devices. In addition to these parts, the development board has pins that can receive power from a battery and connect to other electrical components, such as the buttons used in this project. The Vin pin is where the wire from the battery’s positive terminal (usually the red wire) connects, and it accepts the highest input voltage on the board. The Gnd pin is the ground pin, which serves as the reference that we call zero volts (the black wire from a battery pack connects to this pin). A 3.7V Li-Po, or lithium-ion polymer, battery powers my controller. The NodeMCU should only be able to handle up to about 10V on Vin.

The program that my microcontroller uses to send information to the VR game based on button presses is called a sketch, which is a program written in the Arduino IDE. When uploading the sketch to the NodeMCU over a cable, do not connect the battery, since the cable powers the board. While the board is connected, the Serial Monitor in the Arduino IDE can be used for testing and debugging. It is a console where the strings from Serial.println() calls appear; if the println() calls are placed in the methods that run when a controller button is pressed, then whether the message appears in the Serial Monitor during testing shows whether that button is wired correctly.
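The debug-message idea can be sketched as a small button class that prints only when a button goes from released to pressed. This is a hypothetical model written as plain C++, not the sketch used for the controller: the DebugLog struct stands in for Serial.println(), and on the NodeMCU `nowMs` would come from millis(). The short debounce window ignores the few milliseconds of contact bounce that mechanical buttons produce, which would otherwise print several messages per press.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for Serial.println(): messages go into a vector so
// they can be inspected.
struct DebugLog {
    std::vector<std::string> lines;
    void println(const std::string& s) { lines.push_back(s); }
};

// Report a button only when it changes from released to pressed, ignoring
// changes that arrive within `debounceMs` of the last accepted change.
class Button {
public:
    Button(std::string name, unsigned long debounceMs)
        : name_(std::move(name)), debounceMs_(debounceMs) { assert(!name_.empty()); }

    void poll(bool pressed, unsigned long nowMs, DebugLog& log) {
        if (pressed == state_ || nowMs - lastChangeMs_ < debounceMs_) return;
        state_ = pressed;
        lastChangeMs_ = nowMs;
        if (pressed) log.println(name_ + " pressed");
    }

private:
    std::string name_;
    unsigned long debounceMs_;
    bool state_ = false;
    unsigned long lastChangeMs_ = 0;
};
```

If a press at 100 ms bounces to released at 105 ms and back at 110 ms, only one "Fire pressed" line is logged; a real second press at 300 ms logs a second line. If no line ever appears for a button, the wiring for that button is the first thing to check.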

Buttons and the Breadboard

The push buttons used for my controller have four pins, or terminals. Buttons work by completing a circuit inside them when they are actuated, or triggered. A program or electrical component can be set up to react a certain way when the circuit is complete, such as a light turning on when a light switch is flipped. The more technical term for these buttons is momentary switch, which means the circuit is completed only while the button is being actuated; toggle buttons are an example of maintained switches, which stay connected or disconnected until the next time they are actuated. Switches are also described by their poles, the number of separate circuits a switch controls, and their throws, the number of closed positions each pole has: a single-throw switch simply connects or disconnects its circuit. Although they have four terminals, common four-terminal push buttons like these are usually single pole single throw (SPST): the terminals are internally connected in pairs, and pressing the button joins one pair to the other. To use one, wire one terminal from each pair (for example, two diagonally opposite terminals) into the circuit with two jumper wires (female to female without a breadboard, male to male with a breadboard); the remaining two terminals mainly add mechanical stability.

Because only one terminal from each internally connected pair is needed, only two of each button’s terminals are inserted into the breadboard. These two terminals are wired to a Gnd pin and to either the D1 or D2 pin of the NodeMCU; the sketch runs methods that tell the game to activate firing or walking when D1 and/or D2 receive signals from the buttons. A breadboard is a board often used for testing circuits, because many common electrical components can be connected to it without soldering. The power rails are the two lanes running along the length of the board on either side, and current runs vertically (along the length of the board) through them; battery packs and power supplies can be wired there to supply power to the board. The two middle areas of the board have terminal strips, which are horizontal rows of holes through which current runs horizontally. A trench separates the two middle areas, and no current flows from one side of the trench to the other without jumper wires.
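Since each button sits between a Gnd pin and D1 or D2, pressing it pulls that pin down to ground, so a pressed button reads LOW rather than HIGH. A common way to read this kind of wiring, assumed here rather than taken from the controller’s sketch, is pinMode(pin, INPUT_PULLUP), which holds the pin HIGH through an internal resistor while the button is released. The model below uses 0 and 1 for LOW and HIGH, and the D1-to-fire, D2-to-walk mapping is hypothetical.

```cpp
#include <cassert>

// Assumes each button connects its input pin to GND and the pin is configured
// with pinMode(pin, INPUT_PULLUP): the pin reads HIGH when released and LOW
// when the button is pressed.
constexpr int kLow = 0;
constexpr int kHigh = 1;

bool isPressed(int pinLevel) {
    assert(pinLevel == kLow || pinLevel == kHigh);
    return pinLevel == kLow;  // active-low: pressed pulls the pin to ground
}

// Hypothetical mapping of the two pins to game actions.
struct ControllerState { bool fire; bool walk; };

ControllerState readController(int d1Level, int d2Level) {
    return {isPressed(d1Level), isPressed(d2Level)};
}
```

Without a pull-up (internal or external), a released button leaves the pin floating, and its reading can flip randomly, which can look like a wiring fault during testing.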

Starter Project

My starter project is the MiniPOV4, which is a device that uses LEDs to show a desired image that can be seen when the MiniPOV4 is waved quickly from side to side.

How it Works

Main components and their roles

The MiniPOV4 consists of a printed circuit board (PCB) with various circuit components soldered on. The circuit board has a blue epoxy coating over a copper layer that conducts electricity. The device is powered by a battery pack connected to the MiniPOV4 with a positive and a negative wire.

How custom images are able to be shown

The images shown by the MiniPOV4 can be uploaded to and stored on the device. This data is stored in the board’s microcontroller, an Atmel ATmega328 chip, which also coordinates the order in which the LEDs flash. New images can be uploaded from a computer with a USB cable connecting the computer to the USB connector on the MiniPOV4. When this is done, a 2.2K resistor signals the connection to the computer, which lets the user select the file location of the device’s driver; the computer must have the correct driver for the MiniPOV4 for this to work. Zener diodes, which are polar semiconductors, keep the voltage on the USB data lines at a consistent level, ensuring a reliable connection between the MiniPOV4 and the computer. Because resistors are non-polar, current can flow through them in either direction, so they can be soldered onto the circuit board in either orientation. Polar components must be soldered on with careful attention to orientation, because they only work as intended in one direction.

Other processes

The microcontroller sends signals to other circuit components to regulate important factors such as how brightly the LEDs shine. Transistors boost the signals they receive from the microcontroller so they can power all eight LEDs. Three 2.2k resistors act as base resistors, limiting the current flowing into the transistors so that they are not overwhelmed. Eight 47 ohm resistors set the LEDs’ brightness by limiting the current flowing through them, which keeps them from burning out by shining too brightly. Two 47 ohm resistors limit the current going through the USB connection.
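How a series resistor sets LED current follows from Ohm’s law: the resistor drops whatever voltage the LED does not, so I = (V_supply - V_forward) / R. The sketch below is a simplified model that ignores the small voltage drop across the transistor; the 3.0 V supply and 2.0 V forward voltage in the example are illustrative assumptions, not measured MiniPOV4 values.

```cpp
#include <cassert>

// Ohm's law for a current-limiting resistor in series with an LED:
// I = (V_supply - V_forward) / R. Transistor drop is ignored for simplicity.
double ledCurrentAmps(double supplyVolts, double forwardVolts, double ohms) {
    assert(ohms > 0.0);
    if (supplyVolts <= forwardVolts) return 0.0;  // not enough voltage to light the LED
    return (supplyVolts - forwardVolts) / ohms;
}
```

With the assumed 3.0 V and 2.0 V, a 47 ohm resistor allows about 21 mA (1.0 / 47 A); a larger resistor gives a smaller current and a dimmer, safer LED.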

A potentiometer sets the speed at which the LEDs blink by adding resistance to the circuit. This resistance comes from the current having to travel through resistive material inside the potentiometer, typically graphite, resistance wire, or cermet (a material made of metal and ceramic). A 12 MHz crystal is part of an oscillator circuit that keeps track of time while the MiniPOV4 is running. The crystal’s vibrations create an electric signal with a consistent frequency, and it is this frequency that is used for timing.
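Timing from a crystal comes down to counting clock cycles: at 12 MHz there are 12,000,000 cycles per second, or 12 per microsecond, and each cycle lasts about 83.3 ns. The small sketch below, with hypothetical function names, does this arithmetic.

```cpp
#include <cassert>
#include <climits>

// A 12 MHz crystal gives 12,000,000 clock cycles per second, so timing is
// a matter of counting cycles: cycles = frequency * time.
constexpr unsigned long kCrystalHz = 12000000UL;

unsigned long cyclesFor(unsigned long microseconds) {
    const unsigned long cyclesPerUs = kCrystalHz / 1000000UL;  // 12
    assert(microseconds <= ULONG_MAX / cyclesPerUs);             // avoid overflow
    return microseconds * cyclesPerUs;
}

// Length of one clock cycle in nanoseconds (about 83.3 ns at 12 MHz).
double periodNanoseconds() {
    return 1e9 / static_cast<double>(kCrystalHz);
}
```

For example, waiting 1 ms means counting 12,000 cycles. Because the crystal’s frequency barely drifts, the LED flashes stay evenly spaced as the MiniPOV4 is waved.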

Finally, capacitors smooth out the circuit board’s input and output voltage to make the MiniPOV4’s performance more stable. A ceramic capacitor removes high-frequency noise, while two electrolytic capacitors remove low-frequency noise. The electrolytic capacitors are polarized and are marked to show which side of the capacitor goes to which side of its footprint on the PCB. Capacitors can filter noise because their impedance falls as frequency rises: they give noise a low-impedance path to ground while blocking the steady DC supply. A small ceramic capacitor does this well at high frequencies, while the much larger capacitance of the electrolytic capacitors keeps their impedance low even at low frequencies.
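The frequency dependence comes from the reactance of an ideal capacitor, X_C = 1 / (2πfC). The sketch below evaluates it; the 0.1 µF and 100 µF values are illustrative assumptions rather than the MiniPOV4’s parts list, and real capacitors add resistance and inductance that this ideal formula ignores.

```cpp
#include <cassert>
#include <cmath>

// Reactance of an ideal capacitor: X_C = 1 / (2 * pi * f * C).
// It shrinks as frequency rises, so a capacitor from the supply line to
// ground passes fast noise to ground while leaving the DC supply alone.
double capacitorReactanceOhms(double frequencyHz, double farads) {
    assert(frequencyHz > 0.0 && farads > 0.0);
    const double kPi = 3.14159265358979323846;
    return 1.0 / (2.0 * kPi * frequencyHz * farads);
}
```

An assumed 0.1 µF ceramic capacitor is only about 1.6 ohms at 1 MHz but roughly 16,000 ohms at 100 Hz, so it shunts fast noise but barely affects slow dips; an assumed 100 µF electrolytic capacitor brings the 100 Hz figure down to about 16 ohms, which is why the larger capacitors handle the low frequencies.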

Experiences from Working on the MiniPOV4

My MiniPOV4 starter project surprised me in many ways. Going into it, I was excited because it was my first soldering experience, and I was ready to get hands-on with a subject so foreign to me. That being said, most of the setbacks I encountered stemmed from my inexperience with soldering. My first day of soldering was slow and my work was messy. Not knowing any better, I created solder joints that touched, and I had trouble snipping my resistor leads with a wire cutter. This turned out to be a major issue, as I learned that solder joints that are not supposed to touch can cause a short circuit: current takes an unintended path between them instead of flowing through the circuit as designed.

I spent a significant amount of time the next day desoldering messy joints that might have been touching (my work was so messy that I could not tell whether some joints actually touched), and learned how to use a solder wick and a solder sucker. I found the wick easier to use at first because I had trouble getting the solder sucker onto the joint before the solder cooled and hardened. However, after I practiced with the solder sucker some more (I had a lot of chances to practice, unfortunately), I started to prefer it over the wick, as the wick would get hot when used for long periods.

Aside from desoldering, I came across a major problem when I tried to connect the battery pack to the printed circuit board (PCB). To do this, I first had to strip the battery pack’s red and black wires so that the exposed wire could be soldered onto the PCB. However, I was not careful with the wire stripper and snipped off a large portion of the wire, strands and all. I then had to move further down the wires and strip them from there, which made my wires shorter than they should have been. After this happened several times, I needed to extend the wires so that I could solder them onto the PCB safely and still have a comfortable length to hold the MiniPOV4 with. I used a spool of wire I found and learned about flux, a chemical that cleans oxidation off metal surfaces so that solder flows onto them and bonds. I needed flux to join the spool wire to the wires of the MiniPOV4 battery pack: when I had tried to apply solder to the wires without flux, the solder would not leave the tip of the soldering iron. With the flux, I was able to make the connection between the wires.

Aside from the poor soldering I had to fix and learning to improvise when my wires were cut short, I also learned an important lesson when my MiniPOV4 did not work the first time I tested it. I had to check every solder joint and connection again, which led to a wild goose chase where I desoldered and resoldered many joints trying to find the cause of the problem. In the end, the problem turned out to be the batteries: when I put the battery pack’s cover in place, the batteries would sometimes pop out of position. This surprised me, as I was sure it was a soldering issue and not something as simple as batteries moving out of place. The lesson this taught me is to keep an open mind about the causes of the problems I face, instead of only suspecting what I expected going into a project.