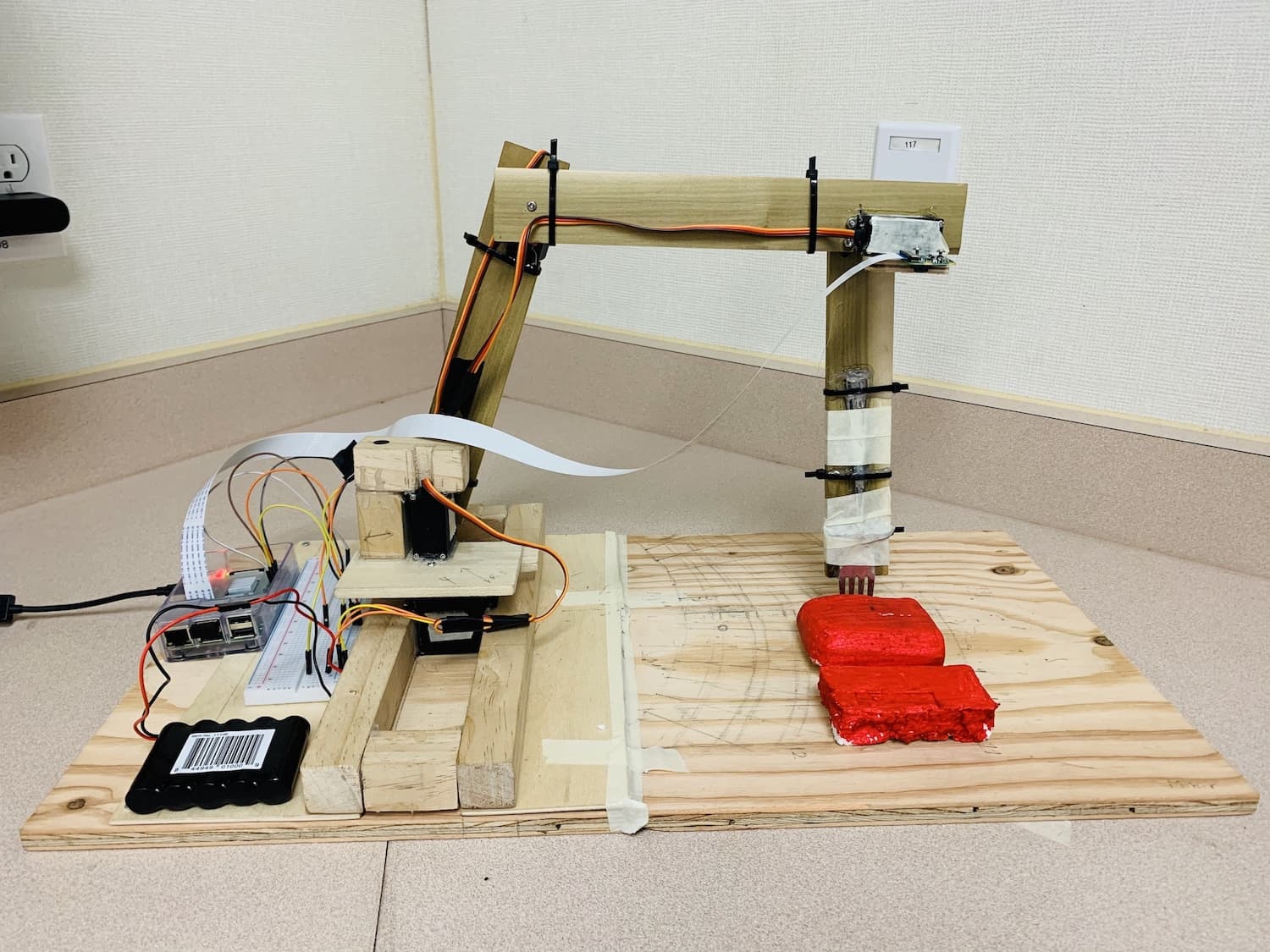

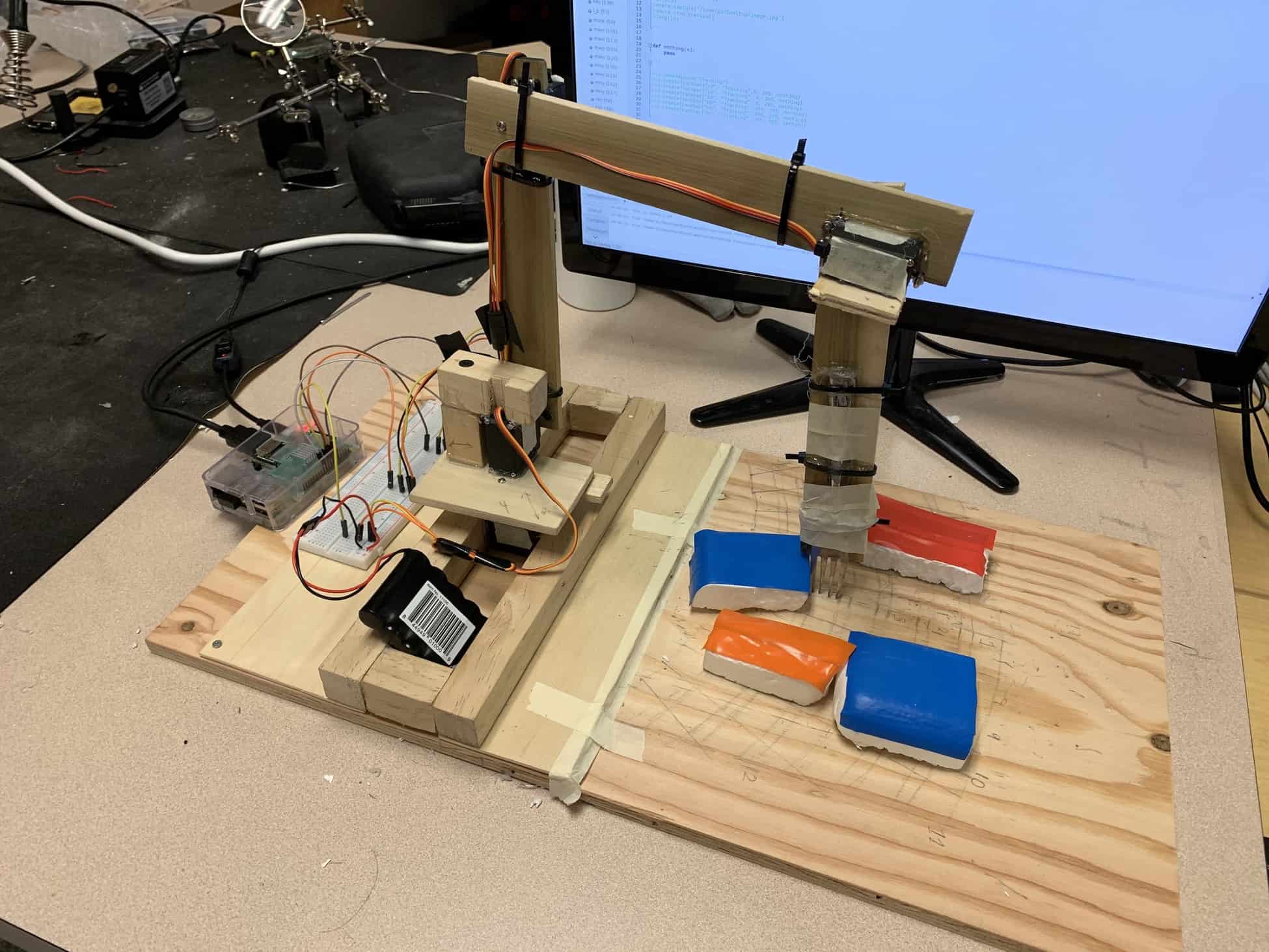

My second milestone was to build the actual arm and then program the 4 servos to work together, using inverse kinematics, so that the arm can move to any set location on a plate. Here is a quick demo:

For the time being, I have drawn a grid on top of my plate so that I can identify a location and move the arm to it. The program takes an x-coordinate (a column number) and a y-coordinate (a row number). As you can see, as soon as I input the coordinates, the arm lifts up to a set, standard position, adjusts each of the motors, and then begins to move down. Currently there are pencils in place of the fork, which I use to measure the accuracy of the arm. After the arm goes down to pick up the food, it moves up to a pre-assigned location close to the user's mouth.
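To make the grid idea concrete, here is a minimal sketch of how a column and row could be turned into a physical target for the arm. The cell size, the grid origin, and the zero-based numbering are all assumptions for illustration, not measurements from my plate.

```python
# Hypothetical grid geometry; replace with values measured on the real plate.
CELL_SIZE_CM = 2.0               # assumed width and height of one grid cell
GRID_ORIGIN_CM = (-9.0, 8.0)     # assumed corner of cell (0, 0), relative to the arm's base


def cell_center(col, row, cell_size=CELL_SIZE_CM, origin=GRID_ORIGIN_CM):
    """Return the (x, y) center of a grid cell in cm, relative to the arm's base.

    Columns and rows are assumed to be numbered from 0.
    """
    if col < 0 or row < 0:
        raise ValueError("column and row must be non-negative")
    x = origin[0] + (col + 0.5) * cell_size
    y = origin[1] + (row + 0.5) * cell_size
    return x, y
```

Aiming for the center of the cell, rather than its corner, matches the goal of landing in the middle of a food piece.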

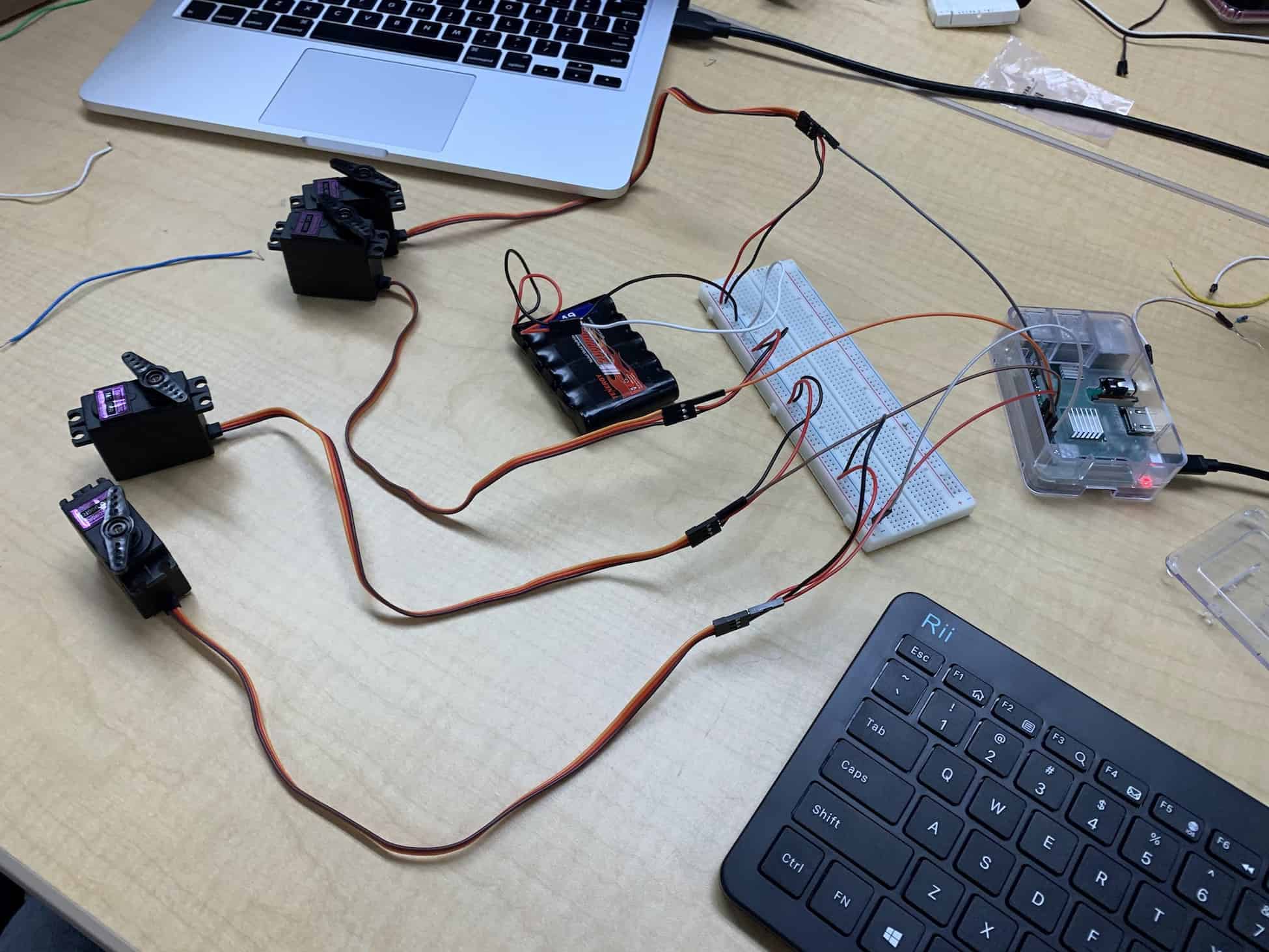

This milestone was heavily focused on constructing an arm whose servos have enough torque to move it up and down while still staying accurate when moving to the center of a food piece. Over the course of the last few weeks, I built a few designs and went through multiple iterations to figure out the best, most minimalistic way to hold the servos in place. There are 4 servos moving in different directions, which allows the arm to cover a large radius and reach every possible spot on a plate placed in front of it. The first motor is at the very bottom and rotates the entire structure through a 180-degree range. The other 3 servo motors move in the same plane and work together to reach a certain spot.

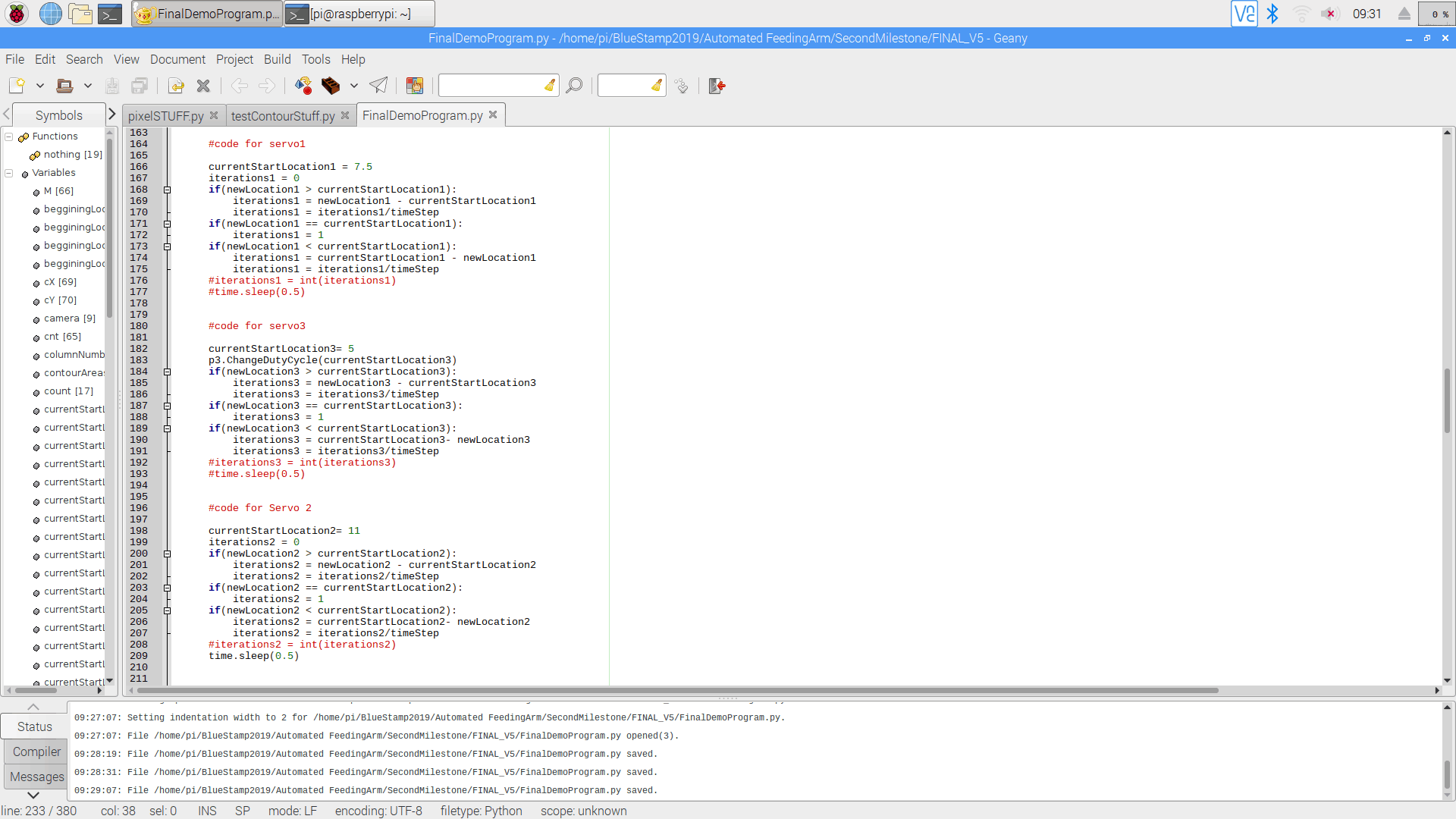

I used inverse kinematics to control the first 3 servos. Inverse kinematics is the use of kinematic equations to determine the joint parameters that place the robot's end-effector at a desired position. So, to reach a certain location on the grid, each of the first 3 servos needs to rotate by a specific angle.
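As a rough illustration of the idea, here is a sketch of inverse kinematics for a rotating base plus a two-link arm. It assumes the three servos in question act as a base, a shoulder, and an elbow, and the link lengths are made-up placeholders, so treat it as an example of the technique rather than my exact code. The `forward_kinematics` helper is only there to check the result.

```python
import math

# Hypothetical link lengths in cm; measure the real arm and replace these.
UPPER_ARM_CM = 12.0
FOREARM_CM = 12.0


def inverse_kinematics(x, y, z, l1=UPPER_ARM_CM, l2=FOREARM_CM):
    """Return (base, shoulder, elbow) angles in degrees for a target point.

    x and y are measured on the plate relative to the base axis; z is the
    height relative to the shoulder joint. The elbow angle is the bend away
    from a straight arm (0 means fully extended, elbow pointing up).
    """
    base = math.atan2(y, x)          # rotate the whole arm toward the target
    r = math.hypot(x, y)             # horizontal distance from the base axis
    dist_sq = r * r + z * z
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(z, r) + math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return tuple(math.degrees(a) for a in (base, shoulder, elbow))


def forward_kinematics(base, shoulder, elbow, l1=UPPER_ARM_CM, l2=FOREARM_CM):
    """Recompute (x, y, z) from the angles, using the same conventions."""
    b, s, e = (math.radians(a) for a in (base, shoulder, elbow))
    r = l1 * math.cos(s) + l2 * math.cos(s - e)
    z = l1 * math.sin(s) + l2 * math.sin(s - e)
    return r * math.cos(b), r * math.sin(b), z
```

Feeding the angles from `inverse_kinematics(10, 10, -5)` back into `forward_kinematics` should land on the same point, which is a handy sanity check before sending anything to the servos. On a real arm the angles also need an offset for how each servo horn is mounted.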

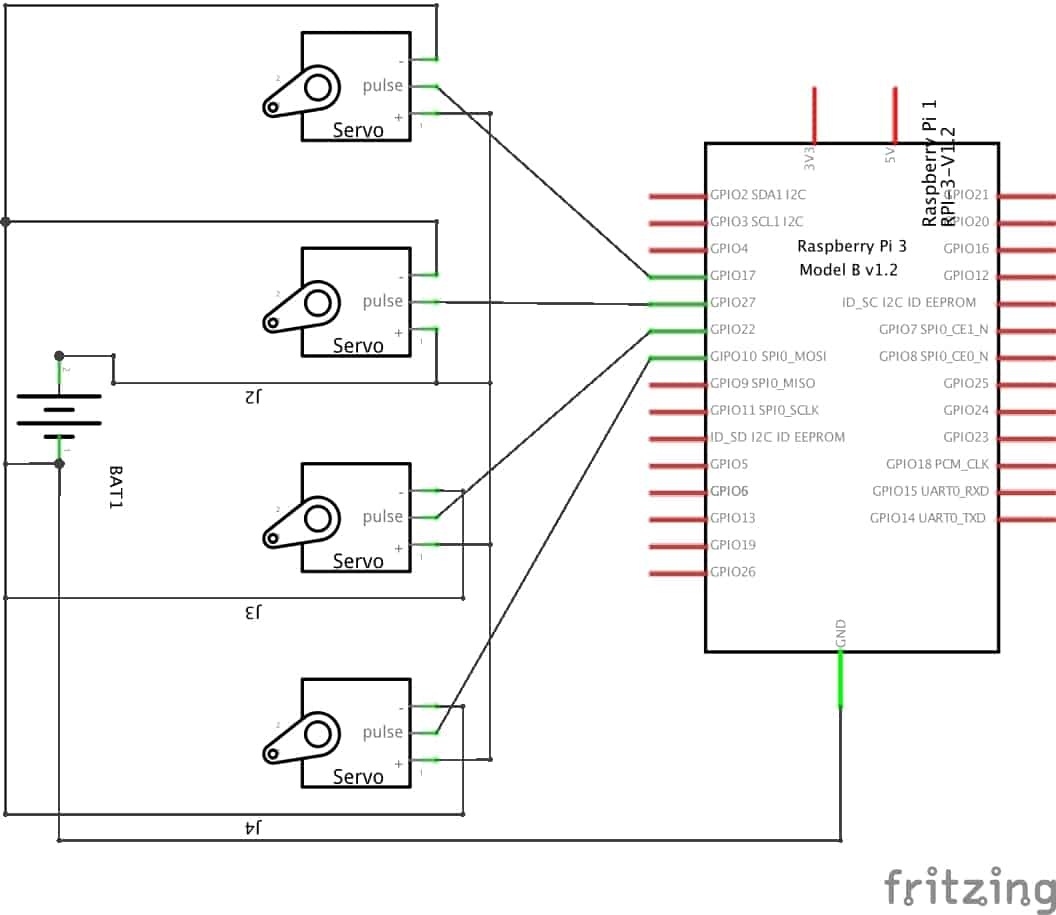

Currently, the circuit is composed of servo motors and a Raspberry Pi. The wiring has not changed since my previous milestone. There are 4 servos with 3 pins each, which need to be connected to ground, an external power source, and a GPIO pin on the Pi. Using generic GPIO pin code, I was able to use a for loop to move the motors back and forth.
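The back-and-forth loop can be sketched roughly like this. Hobby servos are usually driven with a 50 Hz PWM signal, and the pulse width range used here (0.5 ms to 2.5 ms) is an assumption that varies between servo models, so check the datasheet. The function names are my own placeholders. The sketch only computes the values; on the Pi, each duty cycle would be passed to something like `ChangeDutyCycle` on an `RPi.GPIO` PWM object.

```python
def angle_to_duty_cycle(angle, min_pulse_ms=0.5, max_pulse_ms=2.5, freq_hz=50):
    """Convert a servo angle (0 to 180 degrees) to a PWM duty cycle in percent.

    The pulse width limits are assumed values; real servos differ.
    """
    if not 0 <= angle <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle / 180
    period_ms = 1000 / freq_hz
    return 100 * pulse_ms / period_ms


def sweep_angles(start, stop, step):
    """Return the angles for one sweep from start up to stop and back again."""
    up = list(range(start, stop + 1, step))
    return up + up[-2::-1]   # come back down without repeating the top angle


# One back-and-forth sweep, expressed as duty cycles.
duty_cycles = [angle_to_duty_cycle(a) for a in sweep_angles(0, 180, 45)]
```

Keeping the angle-to-duty-cycle math in its own function makes it easy to test on a laptop before anything is wired up.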

I faced many struggles when creating the arm. My first design was heavy, ineffective, and unnecessarily large. I ended up redoing the entire design while also adding multiple supports to balance the weight of the arm.

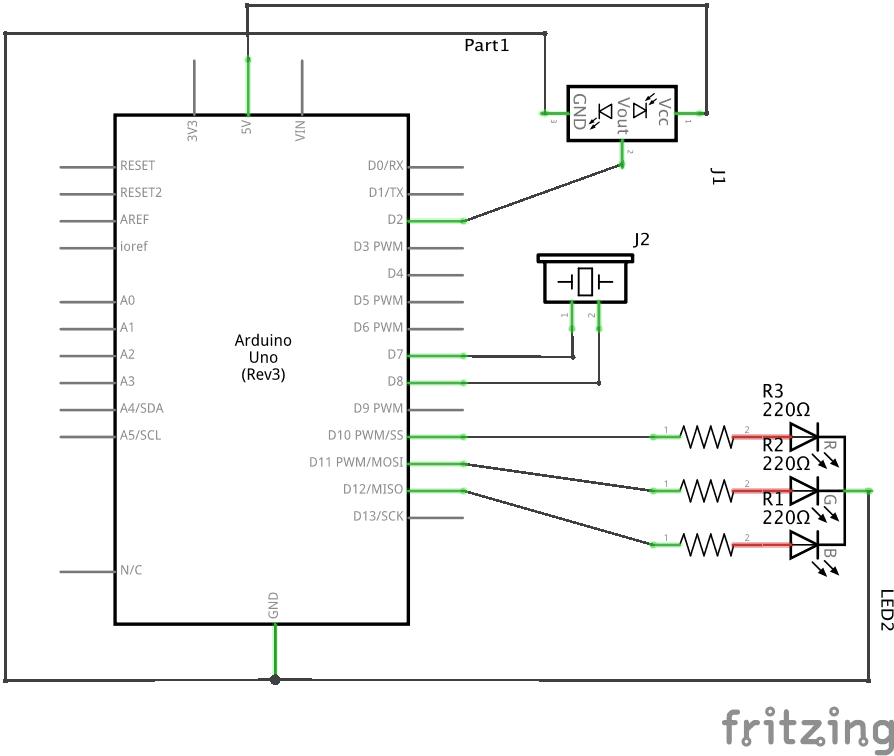

Now that the structure of my arm is up and running and the arm can move to any coordinates I input, the next step is to attach a camera and use OpenCV to detect the food and move to it.